If you’ve been in mobile marketing for two or more years, you are aware of the ever-increasing automation taking over every advertising platform. Long gone are the days of crunching data to find the perfect bid for each of your 20+ micro-segmented audiences in order to deliver a perfectly personalized experience while yielding maximum ROAS. Today, the more you try to narrow down your ideal target audience, the bigger the punishment you receive, quite literally on your bill.

That’s why, now more than ever, creative is king. The creative itself acts as your targeting lever, one of the two you can still pull as a UA manager, the second being your optimization events. But to succeed in scaling your UA with creatives, you also need a solid creative testing methodology in place: the queen to accompany the king.

So, without further ado, below we list 6 golden rules of creative testing, which apply whatever your vertical or team size:

DOs

Integrate creative testing into an agile process

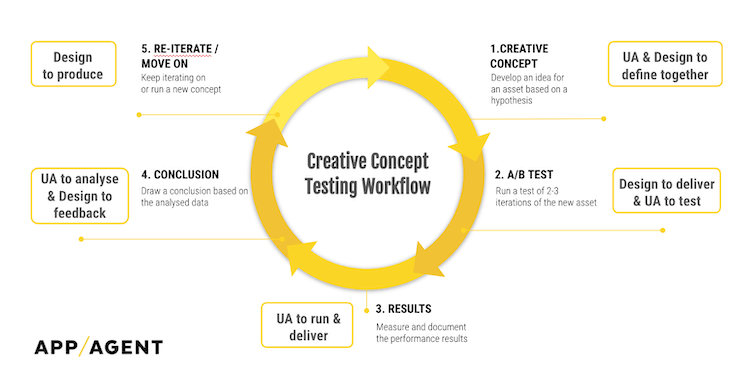

Creative testing cannot be a standalone discipline. Insights from completed tests need to feed continuously into ad creative ideation and conceptualization, where they shape new hypotheses and new variants of existing concepts.

Focus on proving a concept first before moving into extensive iterations

It is, however, important to test a few (2-3) significant iterations in order to avoid missing out on a potential winner due to a “wrong” element, as the difference between a winner and a loser is often very small.

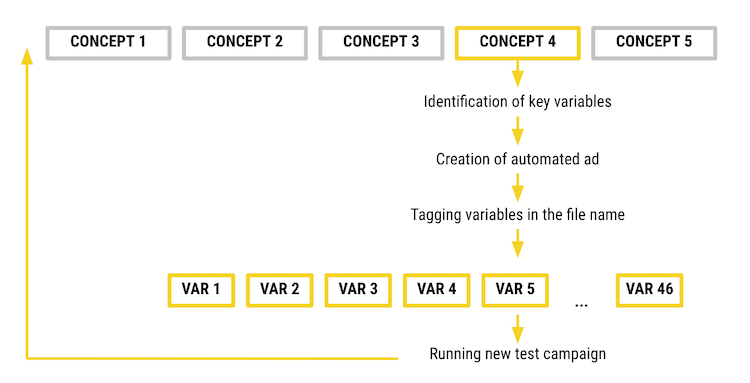

Here is a depiction of what that iterating process might look like:

Source: AppAgent

If you are new to creative testing and don’t know where to start, we recommend a cadence of 5 new concepts per week if you’re spending between $100k and $500k per month.

Then, once you find a winning concept, you want to drill down into detailed iterations of it by identifying the key variables and, if possible, even automating their production.

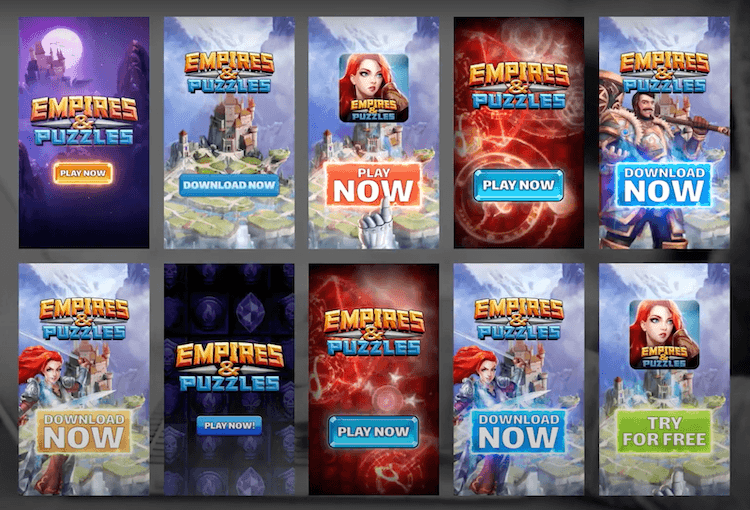

To illustrate that, below are a few examples of different packshots we have done at AppAgent for Empires & Puzzles in the past using this methodology. You can use video ads automation for this task.

Source: AppAgent

Reach statistical significance, always

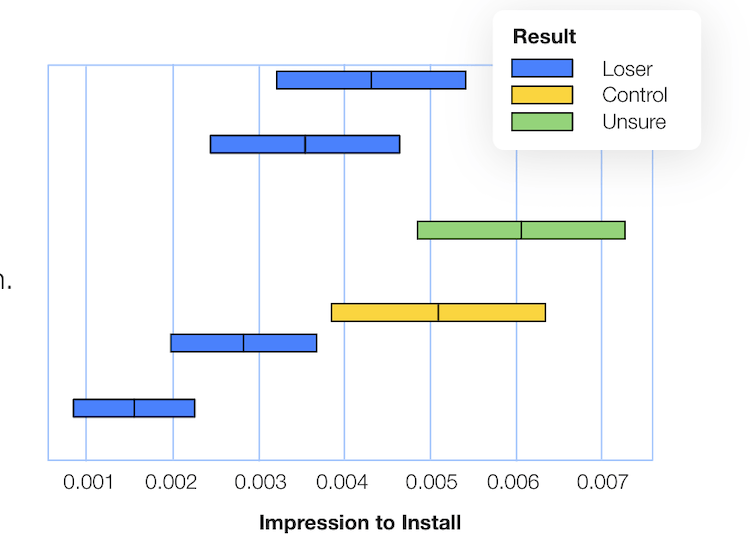

To draw definitive and reliable conclusions, you need to reach statistical significance, which means allowing enough time to accumulate sufficient volume. For MAI campaigns (optimizing towards app installs), we have found that roughly 300 installs gives over 90% confidence, so as a rule of thumb you should treat that as your minimum. Of course, this changes once you move to an AEO or VO setup, and it will vary further depending on the type of app and monetization you have.

Source: AppAgent
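As a rough illustration of what "reaching significance" can mean in practice, here is a minimal sketch that compares the install rates of two creatives with a standard two-proportion z-test. This is not AppAgent's own method: the function names, the per-impression install rate, and the choice of test are assumptions made for the example. Only the 300-install floor and the 90% confidence level come from the rule of thumb above.

```python
import math


def confidence_rates_differ(installs_a, impressions_a, installs_b, impressions_b):
    """Two-sided confidence (0..1) that creatives A and B have different install rates.

    Uses a pooled two-proportion z-test (an assumption for this sketch).
    """
    rate_a = installs_a / impressions_a
    rate_b = installs_b / impressions_b
    pooled = (installs_a + installs_b) / (impressions_a + impressions_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    if std_err == 0:
        return 0.0
    z = abs(rate_a - rate_b) / std_err
    # For a standard normal variable, P(|Z| < z) = erf(z / sqrt(2)).
    return math.erf(z / math.sqrt(2))


def is_conclusive(installs_a, impressions_a, installs_b, impressions_b,
                  min_installs=300, min_confidence=0.90):
    """True only if both creatives hit the install floor and the confidence threshold."""
    if min(installs_a, installs_b) < min_installs:
        return False
    confidence = confidence_rates_differ(installs_a, impressions_a, installs_b, impressions_b)
    return confidence >= min_confidence
```

Treat the thresholds as starting points: for AEO or VO campaigns you would count the optimized event instead of installs and will likely need different volumes.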

Eliminate bias, as much as you can

🔍 Master Onboarding with JTBD & MaxDiff

Discover how to optimize your app’s onboarding process using the Jobs-to-be-Done framework and MaxDiff analysis.

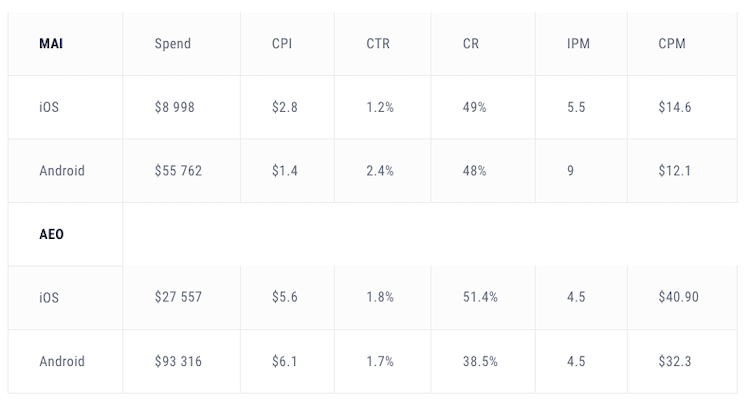

Download nowBelow is an example of how these metrics vary between the two setups from campaigns we ran for Wargaming:

Source: AppAgent

Evaluate accordingly

In other words, adapt the KPIs you evaluate by, as well as their benchmarks, to the platform and format you are testing on. For instance, on YouTube you want to look at the View Rate as one of the top funnel KPIs, which is not a KPI you would consider when evaluating a Facebook ad.

Different channels also tend to bring users of different quality, so you might need different volumes to reach statistical significance when testing for bottom funnel metrics. How to test new mobile user acquisition channels is well described in this summary.

Connect the dots throughout the UA journey

As old and over-repeated as this is, tons of apps still fail at it. Check out the data below and make a judgement for yourself on how important it is to create a consistent user experience on the acquisition journey. That is, maintaining the same look & feel and evoking the same emotion by replicating key elements and colors from the very first impression of the ad, through to the store, and to the app itself.

Now that we’ve covered our 6 major DOs, let’s take a look at 3 definitive DON’Ts that we’ve come across in many non-rookie mobile UA teams:

DON’Ts

Forgetting to look at the top funnel KPIs

It is absolutely crucial to consider the full funnel performance when evaluating any test. Not considering top funnel KPIs and only looking at the bottom of the funnel and profitability is extremely common. Looking at primary top funnel KPIs will not only allow for more reliable comparisons but also save a significant amount of budget as success/failure can be seen much faster.

Primary KPIs to consider: Scale, CTR, IPM / IR, ROI

Secondary KPIs to consider: Relevance score & engagement (likes, comments, etc.)
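To make the primary KPIs concrete, here is a small sketch that derives them from raw campaign counts. The `CreativeStats` class and `funnel_kpis` function are hypothetical names for this example, and the formulas are the commonly used definitions (IPM as installs per 1,000 impressions, IR as installs per click, ROI as net return over spend), which we assume match what your reporting uses. Scale is simply spend or install volume, so it is not computed here.

```python
from dataclasses import dataclass


@dataclass
class CreativeStats:
    """Raw counts for one creative over the test window (hypothetical structure)."""
    impressions: int
    clicks: int
    installs: int
    spend: float
    revenue: float


def funnel_kpis(stats):
    """Return top and bottom funnel KPIs, using 0.0 when a denominator is zero."""
    ctr = stats.clicks / stats.impressions if stats.impressions else 0.0
    ipm = 1000 * stats.installs / stats.impressions if stats.impressions else 0.0
    install_rate = stats.installs / stats.clicks if stats.clicks else 0.0
    roi = (stats.revenue - stats.spend) / stats.spend if stats.spend else 0.0
    return {"ctr": ctr, "ipm": ipm, "ir": install_rate, "roi": roi}
```

CTR and IPM stabilize long before ROI does, which is why reading them first lets you spot a losing creative sooner.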

Interrupting the test prematurely

We get it, time is money and you want to make sure that you are not wasting any of it, but impatience can lead you towards the wrong conclusions.

As a rule of thumb, you should always run your test for a full 7 days, because all apps experience weekly seasonality. Ideally, you also want the creative to exit the learning phase, so as to allow for reliable, optimized results.

This, of course, is provided that your creatives are not absolute losers – no need to wait for 7 days if you haven’t seen an install beyond the first $100 spent.
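The stopping rules above can be written down as a simple decision helper. This is only a sketch: `next_action` and its parameters are hypothetical, and the 7-day minimum and $100 zero-install cutoff are the rules of thumb from this article, which you should tune to your own app.

```python
def next_action(days_run, spend, installs, in_learning_phase,
                min_days=7, kill_spend=100.0):
    """Decide what to do with a creative that is currently being tested.

    Returns "stop", "keep_running", or "evaluate".
    """
    # Absolute losers: budget spent past the cutoff without a single install.
    if installs == 0 and spend >= kill_spend:
        return "stop"
    # Otherwise wait for a full week (weekly seasonality) and for the
    # learning phase to end before drawing conclusions.
    if days_run < min_days or in_learning_phase:
        return "keep_running"
    return "evaluate"
```

For example, `next_action(2, 120.0, 0, True)` returns `"stop"`, while a creative with installs on day 3 is kept running regardless of how its early numbers look.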

Creating limitations through counterproductive goals

Creative testing is a numbers game and, as a rule, includes losing many times before finding the next big win. The trick is not to avoid losing at all costs but, on the contrary, to lose fast and find a winner that will be able to compensate for all the losses accumulated on the way.

Finally, as the cherry on top, we have a few tips for you on how to nail collaboration between the UA & creative teams, so that they can find the most success working together:

Appoint a main responsible person on each side

As with every work process, it is vital to clarify responsibilities from the get-go.

Meet weekly

This is a fluid discipline with lots of things overlapping between the two teams, so close collaboration with open communication is absolutely crucial.

Review and translate the data for the creative team

As a UA manager, it is your responsibility to take the time out of your day to translate the data into actionable insights that the creative team will understand.

Check in on progress early to avoid misalignment

There is nothing more frustrating for both sides of the table than meeting at the end of the week to review the produced assets and realizing that they are the opposite of what you wanted.

Look back at the month/quarter together and brainstorm

Make sure you hold a regular retrospective meeting. Whether it is monthly or quarterly, it will help ensure you always carry the learnings forward with you.

Here is a visualization of what that process looks like:

Source: AppAgent

Thanks for reading this all the way through and remember: continuous creative testing is the pillar of successful UA; always produce new ads. And if you don’t have the capacity to do so in-house, don’t hesitate to get in touch with us at AppAgent!

Last but not least, as promised, here is the webinar recording.

You can also download an eBook with 33 pages of our knowledge about creative testing, including real-life examples showing just how much of a difference the right creative can make to your business economics.

Good luck!