TikTok is making it easier for users to filter videos that could be deemed unsafe for younger audiences.

Addressing criticism over security and user exposure to potentially harmful content, the company has already added parental controls.

But the latest addition means users themselves can decide whether recommended content is suitable for them.

The For You feed was designed to let users explore new content from creators they already follow or like. They can now also use the “not interested” tool to skip videos from a particular creator.

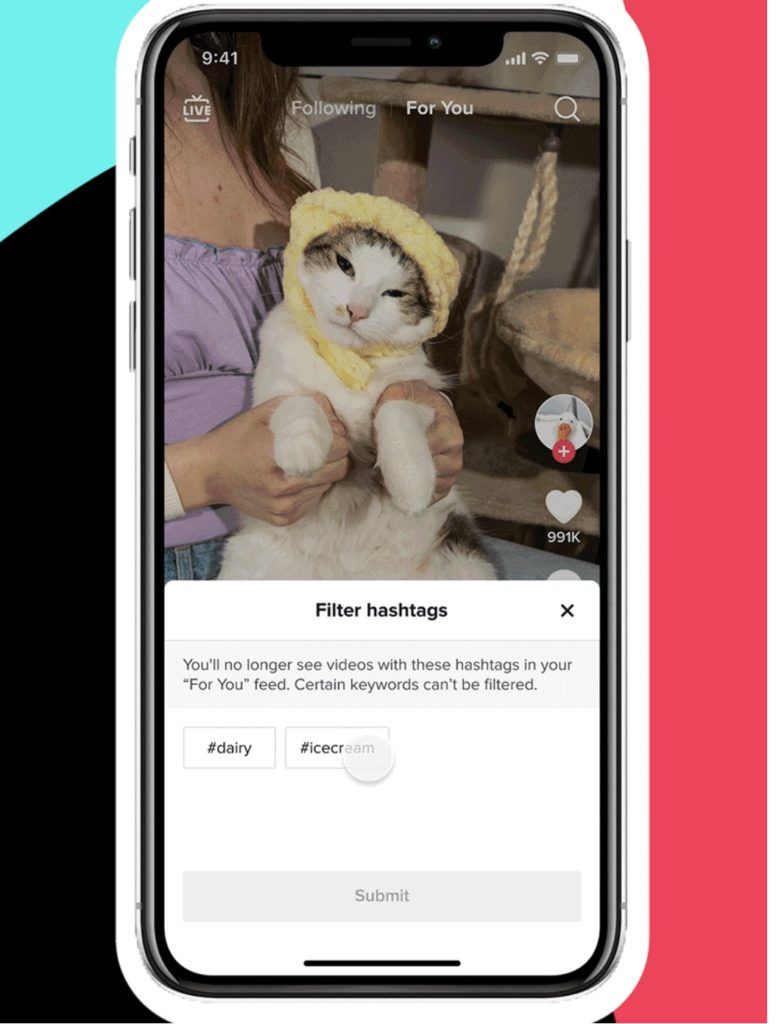

TikTok is also developing ways for users to auto-filter videos with words or hashtags they prefer not to see. For example, a user who is on a diet may not want to see videos relating to “ice cream” and could hide them using the new feature.

The app has been working on ways to limit content that could be harmful, such as extreme fitness or dieting videos.

“We’re also training our systems to support new languages as we look to expand these tests to more markets in the coming months. Our aim is for each person’s For You feed to feature a breadth of content, creators, and topics they’ll love,” TikTok wrote.

Over the next few weeks, it also plans to launch a system to organise content based on thematic maturity. In other words, users aged 13 to 17 will not be shown certain mature-themed videos because they are too frightening, sexualised, or otherwise inappropriate.

TikTok said it’s also working on ways to offer more detailed content filtering for its community.